What have our brains ever done for us?

There are some voices in the futurology/singularity/pie-in-the-sky business saying that sometime soon we will have electronic devices that exceed the computing power of the human brain. The reasoning goes that when that happens, AI will really take off and the Singularity will finally occur, as intelligent machines find ever more ingenious ways to increase their computing power. All this is supposed to start, remember, because your laptop, or whatever you'll have in about ten years, will have more computing power than you.

I wonder, though, whether the laptop, or whatever you have, doesn't already exceed your computing power, in a way. Let me explain.

First, I should say that I am aware of what robots can and can't do (well). I have worked at the DLR Institute of Robotics and Mechatronics, and while my task was related to sensor fusion in the medical group, I could see the work done on the Justin platform, a robot with two arms and various sensors. It needed several computers, all chugging along at maximum power, to provide accurate, precise control of the arms and to process the sensor data. I daresay Justin's arms are probably better, control-wise, than those of any human, but the software behind them is monstrously complex and fairly resource-hungry. It's a safe bet that the computers needed to control Justin use a lot more than the 20-40 W estimated for the human brain: a single desktop processor, such as some AMD Phenom and Intel Core i7 models, is rated at 65 W or more.

If DLR is the best (I think) at arms, Boston Dynamics is the best at legged locomotion. Their BigDog made quite a splash at launch, and their videos of the running Cheetah robot are quite impressive as well, if you have any idea of how tricky it is to get accurate control of something that nevertheless seems second nature to you or me.

But that's just it: those things are second nature to you, me, and any mammal. Indeed, to any animal. And while I'm fairly certain that the human ability to do object recognition surpasses that of any other organism on Earth (possibly because of language?), visual processing and object recognition are also things many animals must be fairly proficient at. If it's not image processing, then other senses, like echolocation or even smell, offer a wealth of data to analyze.

Bottom line: many, if not all, of the things that humans do well and robots cannot (or cannot do as efficiently) are things we share with all the other, simpler brains that exist.

Meanwhile, human long-term memory is notoriously fickle and subject to alteration. There are strong limits to how much of a sequence a human being can recall and to how many levels can be distinguished in a unidimensional variable (as described in George Miller's paper "The Magical Number Seven, Plus or Minus Two"). The Wason selection task shows that we're not that good at basic reasoning, and we are notoriously bad at handling probabilities.
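The logic of the selection task itself fits in a few lines. This sketch assumes the common textbook version (cards showing E, K, 4 and 7, and the rule "if a card has a vowel on one side, it has an even number on the other"); the function name `must_turn` is hypothetical. Only a card whose hidden side could produce a counterexample needs turning, which gives E and 7, whereas most people pick E alone, or E and 4.

```python
def must_turn(visible: str) -> bool:
    """Return True if the hidden side of this card could falsify the rule
    'if a card has a vowel on one side, it has an even number on the other'."""
    if visible.isalpha():
        # A vowel might hide an odd number; a consonant says nothing about the rule.
        return visible.upper() in "AEIOU"
    # An odd number might hide a vowel; an even number can never break the rule.
    return int(visible) % 2 == 1


cards = ["E", "K", "4", "7"]
print([c for c in cards if must_turn(c)])  # ['E', '7']
```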

The picture emerging from all this is that our minds are not quite as quick and astute as we give ourselves credit for. Case in point: the circuitry for reasoning about social situations, which works well, isn't co-opted, or isn't properly co-opted, for abstract reasoning tasks that are logically equivalent (as the Wason selection task shows). We can become magically proficient with practice; only recently have computers finally been able to beat human chess grandmasters. But what the human player is doing isn't really analyzing millions of positions the way a computer might. There are some theories about what's going on, stemming, surprise surprise, from that Magical Number Seven paper and its focus on chunking, and from what chunking implies for how memory is organized to store and recognize larger patterns. We seem very good at that, but it takes us a while to learn and form the patterns, and recall is imperfect and liable to decay unless used often.
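A toy illustration of the chunking idea, not a model of human memory: twelve digits exceed a span of about seven items, but if some substrings are already familiar patterns, the same sequence collapses to three chunks. The `chunk` function, the set of "familiar" years, and the greedy longest-match strategy are all assumptions chosen for simplicity.

```python
def chunk(digits: str, known: set[str]) -> list[str]:
    """Greedily split a digit string into the longest familiar patterns.

    Anything not found in `known` falls back to single digits.
    """
    chunks = []
    i = 0
    while i < len(digits):
        # Try the longest candidate starting at position i first.
        for size in range(len(digits) - i, 0, -1):
            piece = digits[i:i + size]
            if piece in known or size == 1:
                chunks.append(piece)
                i += size
                break
    return chunks


familiar_years = {"1492", "1776", "1969"}  # hypothetical "long-term memory"
seq = "149217761969"
print(len(seq), "digits ->", chunk(seq, familiar_years))
```

Without the familiar patterns (an empty `known` set) every digit stays its own item, which is the unchunked case.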

And, finally and obviously, a computer can compute much faster than any human, calculating savants included: for a big enough arithmetic problem, the computer wins.

So one wonders whether comparing the computing power of today's processors with some hypothetical, and likely bullshit, estimate of the brain's computing power has any value. Brains appear to be very good at certain tasks, which may skew such estimates one way, while simultaneously being not so good at others, which skews them the other way.

One also wonders whether in robotics we aren't trying, in a way, to hammer a nail with a wet fish. Specifically, I'm talking about control of systems here. Let's pick a simple application, where a proportional-integral-derivative (PID) controller is used to control a linear system. The algorithm is fairly simple: you are given an input signal representing the difference (the error) between where you are and where you want to be. Since the system you are controlling is linear, you can set the control you apply to a sum of three terms: one proportional to the error, one proportional to its derivative, and one proportional to its integral. Explaining why that works is beyond the scope of this post. Just notice that there are at least two ways to implement it.
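A minimal discrete-time sketch of the loop just described, of the kind a microcontroller would run over and over. It assumes a first-order linear plant, dy/dt = (u - y) / tau, integrated with forward Euler; `simulate_pid`, `tau` and the gain values are illustrative choices, not tuned for any real system.

```python
def simulate_pid(kp, ki, kd, setpoint=1.0, dt=0.01, steps=2000, tau=0.5):
    """Discrete PID loop driving an assumed first-order plant dy/dt = (u - y) / tau."""
    y = 0.0                       # plant output: "where you are"
    integral = 0.0
    prev_error = setpoint - y     # avoids a derivative spike on the first step
    for _ in range(steps):
        error = setpoint - y                      # where you want to be minus where you are
        integral += error * dt                    # integral term: accumulated past error
        derivative = (error - prev_error) / dt    # derivative term: trend of the error
        u = kp * error + ki * integral + kd * derivative
        prev_error = error
        y += dt * (u - y) / tau                   # forward-Euler step of the plant
    return y


print(simulate_pid(kp=2.0, ki=1.0, kd=0.05))
```

With these assumed values the output settles close to the setpoint of 1.0 within the simulated 20 seconds. Setting `ki` and `kd` to zero leaves a purely proportional controller, which on this plant stalls at 2/3 of the setpoint; the integral term is what removes that steady-state error.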

One, you can use a microcontroller. That's a small microprocessor, containing thousands to millions of transistors, and it's what is most commonly done today.

Or, two, you can use one operational amplifier, two capacitors, and two resistors. The op-amp itself typically contains a few dozen transistors (the classic 741 has about twenty), certainly orders of magnitude fewer than a microcontroller.
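For comparison, here is how the analog version's components set the same three gains. The circuit is an assumption, since the post does not show one: a common single-op-amp arrangement is an inverting amplifier with R1 in parallel with C1 at the input and R2 in series with C2 in the feedback path. For an ideal op-amp its transfer function is Vout/Vin = -[(R2/R1 + C1/C2) + 1/(s R1 C2) + s R2 C1], so four component values fix all three gains. Practical versions usually add parts to limit the derivative gain at high frequency and an extra inverting stage to fix the sign. The function name and component values below are hypothetical.

```python
def opamp_pid_gains(r1, c1, r2, c2):
    """Map component values of an assumed single-op-amp PID stage to PID gains.

    Topology: inverting amplifier, input R1 in parallel with C1,
    feedback R2 in series with C2, ideal op-amp:
        Vout/Vin = -[(R2/R1 + C1/C2) + 1/(s*R1*C2) + s*R2*C1]
    The overall sign inversion is ignored here.
    """
    kp = r2 / r1 + c1 / c2   # proportional gain (dimensionless)
    ki = 1.0 / (r1 * c2)     # integral gain, in 1/s
    kd = r2 * c1             # derivative gain, in s
    return kp, ki, kd


# Hypothetical values: R1 = 10 kOhm, C1 = 1 uF, R2 = 20 kOhm, C2 = 100 uF
print(opamp_pid_gains(10e3, 1e-6, 20e3, 100e-6))
```

These particular values give roughly kp = 2.01, ki = 1 per second and kd = 0.02 seconds: the whole controller is four passive parts around one amplifier.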

Analog multiplication is also possible using specialized circuits, which likewise need only one or two handfuls of transistors.
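One op-amp-based route to multiplication is the log-antilog approach: a logarithmic amplifier turns each input into a voltage proportional to its logarithm, a summing amplifier adds the two, and an exponential (antilog) amplifier converts the sum back. The sketch below only mimics that signal path numerically. It is an idealization (real log amplifiers rely on diode or transistor characteristics and drift with temperature), and the function name is hypothetical.

```python
import math


def log_antilog_multiply(x, y):
    """Multiply two positive values the way a log-antilog stage does:
    take logarithms, add them, then exponentiate.

    Restricted to x, y > 0, mirroring the single-quadrant limit
    of a basic log/antilog circuit.
    """
    if x <= 0 or y <= 0:
        raise ValueError("log-antilog multiplication needs positive inputs")
    return math.exp(math.log(x) + math.log(y))


print(log_antilog_multiply(3.0, 4.0))  # approximately 12.0
```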

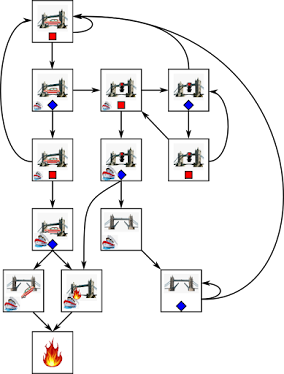

|

| Logarithmic and exponential computation with op-amps. Upper figure is from wikipedia and is licensed as public domain. |

On the other hand, some interesting complications of the narrative notwithstanding, error correction, data transmission, and data storage all have a strong digital component. One sends analog signals corrupted by analog noise, of course, but in the end what one usually wants is to recognize discrete, digital levels in those signals.
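Recovering discrete levels from a noisy analog signal can be sketched as a nearest-level decision, often called a slicer. The four-level alphabet, the noise level, the seed and the names below are assumptions for illustration. As long as the noise stays well below half the spacing between levels, every symbol comes back exactly, even though no received sample equals the value that was sent.

```python
import random


def slice_levels(samples, levels):
    """Map each noisy analog sample to the nearest allowed discrete level."""
    return [min(levels, key=lambda lvl: abs(s - lvl)) for s in samples]


rng = random.Random(0)          # fixed seed so the run is repeatable
levels = [0.0, 1.0, 2.0, 3.0]   # assumed four-level signalling (2 bits per symbol)
sent = [rng.choice(levels) for _ in range(1000)]
# Additive Gaussian noise, small compared with the 0.5 half-spacing between levels.
received = [s + rng.gauss(0.0, 0.05) for s in sent]
decoded = slice_levels(received, levels)
errors = sum(a != b for a, b in zip(sent, decoded))
print("symbol errors:", errors)
```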

Besides, digital electronics has proven very adaptable. It is also more resilient to imperfections like noise. One can say that a real digital computer is closer to an ideal digital computer than a real analog computer is to an ideal analog one (analog multipliers are very susceptible to noise, for example). This is why most research today (most, not all) is done with digital computers.
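Much of that resilience comes from regeneration: a digital stage snaps its input back to a valid level before passing it on, so noise does not pile up. A minimal sketch, assuming binary levels 0 and 1, independent Gaussian noise at each stage, and hypothetical names and values. The analog chain's error grows roughly with the square root of the number of stages (here a standard deviation of about 0.05 × √100 = 0.5), while the regenerated value stays exactly 1.0 as long as each stage's noise is small compared with the 0.5 decision threshold.

```python
import random


def pass_through_stages(value, stages, noise, regenerate, rng):
    """Send a value through a chain of noisy stages (copies, repeaters, gates).

    If `regenerate` is True, each stage snaps the signal back to the nearest
    valid level (0 or 1), as a digital buffer would; otherwise the noise of
    every stage accumulates, as in a purely analog chain.
    """
    for _ in range(stages):
        value += rng.gauss(0.0, noise)
        if regenerate:
            value = 1.0 if value >= 0.5 else 0.0
    return value


rng = random.Random(1)
analog = pass_through_stages(1.0, stages=100, noise=0.05, regenerate=False, rng=rng)
digital = pass_through_stages(1.0, stages=100, noise=0.05, regenerate=True, rng=rng)
print(f"analog: {analog:.3f}  digital: {digital:.1f}")
```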

My guess is that some of the things that animal brains are very efficient at are a result of clever use of analog circuitry. I'd further guess that whenever the brain tries to go digital, which includes things like reasoning, symbolic processing and the like, it places itself in the 'hammer a nail with a wet fish' situation. Ultimately it's not a question of more computing power; it's a question of either using the right hardware for the job, or finding the programming to efficiently complete certain tasks. Probably both.

I'm getting more interested in these chunking models of human memory, incidentally. Human memory is not a database. It appears that acquiring new facts somehow mixes them with the old, with interesting results where reasoning, planning, creativity and so on are concerned. As a pure storage mechanism, memory, well, sucks. But maybe its malleability is a feature, not a bug. There's some research in this area that needs looking into...
