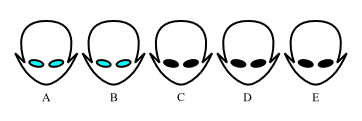

Here's a nifty gem of epistemic logic, a little puzzle that crops up in many forms. It appears on Terence Tao's blog, there's also an xkcd page about it, and there are several variations on it. Consider this one, simpler but complex enough to capture the subtlety: suppose there's a group of five aliens who are highly logical beings (whatever can be deduced, each alien deduces immediately) but also rather quirky. None of them knows the color of their own eyes, they don't speak with each other about eye colors, and they live where no reflective surface or the like will ever reveal that information. So each alien knows only the colors of the others' eyes and not their own. If it matters, the possible eye colors that the aliens know about are blue, green, red, and black. As it happens, two of the aliens in the group have blue eyes, and the remaining three have black eyes. A further quirk of these aliens: if ever one of them discovers the color of his/her