Sci-hype: peta-bytes of BS

I recently bumped into one of those estimates of the power of the human brain, the kind made to make us very proud of our hardware, here. So, apparently, says Paul Reber, professor of psychology at Northwestern University, the brain has 2.5 petabytes of long-term storage available. To be fair, his article was mostly meant to reassure any worried readers that they'll never have their brain warn them about "low disk space". Putting a number on that capacity, though, is very dubious, and as someone with some experience in computer systems, I'll now explain why.

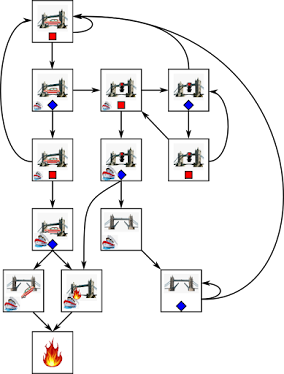

Reber arrives at his estimate as follows. Suppose there are 1 billion neurons that are involved in fact-memories (he makes a good point here: not all neurons in the brain are likely to be used for that purpose). Typically, a neuron has a thousand or so connections to other neurons which-

Paul Reber: amounting to more than a trillion connections. If each neuron could only help store a single memory, running out of space would be a problem. You might have only a few gigabytes of storage space, similar to the space in an iPod or a USB flash drive. Yet neurons combine so that each one helps with many memories at a time, exponentially increasing the brain’s memory storage capacity to something closer to around 2.5 petabytes (or a million gigabytes).

Wait, what? Where did the terabyte or so capacity suddenly jump to 1000 terabytes, aka the petabyte order, just because of some magical pattern in memory storage? This is an important question that I'll return to later, but first an observation.

Paul Reber: if your brain worked like a digital video recorder in a television, 2.5 petabytes would be enough to hold three million hours of TV shows. You would have to leave the TV running continuously for more than 300 years to use up all that storage.

Think about that. Or, think of your favorite show. Do you remember how often a door was opened and closed in the episode from last week? I take it some people have amazing, eidetic memory (see the article though- it's not as impressive as it sounds), but they turn out to be very rare. And most of them use mnemonic tricks that are still far short of perfect recall. Imagine this: we could, with 2.5 petabytes of memory, have an HD video of our entire lives inside our heads.
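For what it's worth, here is a quick back-of-the-envelope check of the figures quoted so far. It's only a sketch: I'm assuming decimal petabytes (10^15 bytes) and 365.25-day years, and the variable names are mine, not Reber's.

```python
# Back-of-the-envelope check of the quoted figures.
# Assumptions (not from the article): SI petabyte, 365.25-day year.
PETABYTE = 10**15

capacity_bytes = 2.5 * PETABYTE      # Reber's headline number
tv_hours = 3_000_000                 # "three million hours of TV shows"

neurons = 10**9                      # neurons involved in fact-memories
connections_per_neuron = 1_000       # "a thousand or so connections"
total_connections = neurons * connections_per_neuron

# How long would three million hours of continuous TV take?
years_of_tv = tv_hours / (24 * 365.25)

# What recording rate do the two quoted numbers imply?
bytes_per_hour = capacity_bytes / tv_hours
megabits_per_second = bytes_per_hour * 8 / 3600 / 1e6

# How many bytes would each connection have to hold to reach 2.5 PB?
bytes_per_connection = capacity_bytes / total_connections

print(f"connections:          {total_connections:.1e}")
print(f"years of TV:          {years_of_tv:.0f}")
print(f"implied bit rate:     {megabits_per_second:.2f} Mbit/s")
print(f"bytes per connection: {bytes_per_connection:.0f}")
```

Under those assumptions the TV figure works out to about 342 years, which agrees with "more than 300 years". The interesting line is the last one: to get from a trillion connections to 2.5 petabytes, each connection would have to account for about 2500 bytes. That is the jump I'm complaining about.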

But why would we need it? One of the most cited papers in psychology is "The Magical Number Seven, Plus or Minus Two", about the capacity of working memory. Said limits turn out to be very strict. Selection tasks indicate that the brain can handle deciding from among 8 alternatives just fine, but any more and things get shaky; that's three bits that need deciding. Sequence recall indicates that working memory doesn't operate on bits, but has a limit of seven or so recognizable "items" (or "chunks" as they're called in the paper).

All attempts to put bit values on memory capacity are fairly misguided, I think, but there's a clear indication that there's not a wealth of resources for working memory, and the evidence for a vast store of long-term memory is slim. Plus, it wouldn't be needed. We couldn't process an HD video stream of our lives to look for relevant information anyway. Unsurprisingly, our memory does not work like videotape.

What seems to happen is that people force a computer-like interpretation on the human brain, without bothering to go into the details of either. Here's another estimation, from "On Intelligence" by Jeff Hawkins:

Jeff Hawkins: Let's say the cortex has 32 trillion synapses. If we represented each synapse using only two bits (giving us four possible values per synapse) and each byte has eight bits (so one byte could represent four synapses), then we would need roughly 8 trillion bytes of memory.

The error is assuming that the number of bits required to describe the current state of the system is indicative of the number of bits that the system can store. This number, assuming ALL possible states can be described to the best relevant precision, is an upper limit, of course, and the error Reber is making is forgetting this. However, just because a naive description of a dynamic RAM chip's surface needs lots of information to describe the sizes and shapes of the various materials making up the charge traps and accompanying transistors, and the contents of the charge traps in number of electrons, and the voltages applied to the transistors guarding the charge traps, doesn't mean that each charge trap stores any more than one bit, as far as the system using the RAM is concerned.
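Here's a small sketch of both halves of that argument. The first part just redoes Hawkins' arithmetic. The second part is a toy model of a charge trap: the `ChargeTrap` class, its fields and the read threshold are all made up for illustration and don't describe any real DRAM part.

```python
import struct
from dataclasses import dataclass

# Part 1: Hawkins' arithmetic, using the numbers from the quote.
synapses = 32 * 10**12
bits_per_synapse = 2
hawkins_bytes = synapses * bits_per_synapse // 8

# Part 2: describing a cell versus storing data in it.
@dataclass
class ChargeTrap:
    """Hypothetical, grossly simplified DRAM cell."""
    width_nm: float
    depth_nm: float
    electrons: int
    gate_voltage: float

    def read_bit(self, threshold: int = 5_000) -> int:
        # The system using the RAM only ever sees this one bit.
        return 1 if self.electrons >= threshold else 0

def naive_description_bits() -> int:
    # Three 64-bit floats plus one 64-bit integer, packed without padding.
    return 8 * struct.calcsize("<ddqd")

cell = ChargeTrap(width_nm=40.0, depth_nm=300.0, electrons=8_123, gate_voltage=1.2)
usable_bits = 1

print(f"Hawkins' estimate:  {hawkins_bytes:.1e} bytes")
print(f"naive description:  {naive_description_bits()} bits per cell")
print(f"usable storage:     {usable_bits} bit per cell (currently {cell.read_bit()})")
```

The toy cell takes 256 bits to describe and still stores exactly one. The description size bounds the capacity from above, and that's all it does.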

And now finally I'll return to Reber's error, of magically inflating the estimation into the petabyte range, when the numbers he marshalled would suggest, if anything, a terabyte range (tops).

It's certainly plausible that the brain attempts to compress and/or mix memories together. This doesn't count as an answer to a question about capacity, any more than you'd accept a "but this USB stick can actually hold terabytes of data if you compress it" claim from a vendor. Yeah, some terabyte-long sequences could, maybe, be compressed to something to fit on that stick, but not all of them, and that's not what memory capacity means.

Reber appears to be confusing storage capacity with Kolmogorov complexity here. The idea of Kolmogorov complexity is that a sequence of data only contains as much information as the smallest number of bits needed to describe a program that would output that sequence. For example, you can take a (de)compression program like WinRAR together with some archive to be the sequence of bits describing Kolmogorov's program, and whatever results from unpacking the archive is the output.

Intuitively this makes sense. Take the picture above; it describes a circuit containing 16000 or so storage cells, and drawing all of them on a schematic would take a lot of space. But why bother? All 16000 storage cells are mostly alike, so draw just one, then say "make 16000 of these". Done.

A program that can compute the decimals of PI is an extreme example. Such a program would run forever, producing an infinite sequence, while nonetheless being a finite-length program. Compression can be amazing. In fact, you could "run" this program in your head and, with patience and immortality, produce all the decimals of PI as if you were recalling them from memory. Does this mean you have infinite memory capacity? If yes, then the same would be valid for the computer running it.
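To show how short such a program can be, here is a sketch using Gibbons' unbounded spigot algorithm, which is one known way to stream the digits of pi with nothing but integer arithmetic. The function name `pi_digits` is my own.

```python
from itertools import islice

def pi_digits():
    """Yield the decimal digits of pi one at a time, forever.

    Sketch of Gibbons' unbounded spigot algorithm. The integers it
    carries around grow without bound, so it needs ever more working
    memory the longer it runs.
    """
    q, r, t, k, n, l = 1, 0, 1, 1, 3, 3
    while True:
        if 4 * q + r - t < n * t:
            # The next digit is settled: emit it and rescale the state.
            yield n
            q, r, n = 10 * q, 10 * (r - n * t), (10 * (3 * q + r)) // t - 10 * n
        else:
            # Not enough information yet: fold in one more series term.
            q, r, t, k, n, l = (
                q * k,
                (2 * q + r) * l,
                t * l,
                k + 1,
                (q * (7 * k + 2) + r * l) // (t * l),
                l + 2,
            )

first_digits = list(islice(pi_digits(), 10))
print(first_digits)
```

A couple of dozen lines describe an infinite sequence. Note that the program text is finite, but the machine running it is not off the hook: the state it has to keep grows as it goes.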

Sadly it turns out to be impossible, in general, to find the shortest program that will generate some arbitrary given sequence. Also, there are far more bit sequences of a given length than there are programs shorter than that length, so "most" long sequences do not compress. Not without "losses", which just means you insert errors to change a noncompressing sequence into one that does compress.
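You can see both effects with an ordinary compressor. The sketch below uses Python's `zlib` as a stand-in for "some compression scheme" (it is of course nowhere near the ideal Kolmogorov compressor), and the helper names are mine. The second function is just the counting argument: there are only 2^(n-k+1) - 1 bit strings of length at most n-k, so they can't stand for more than that many n-bit sequences.

```python
import os
import zlib
from fractions import Fraction

def compressed_size(data: bytes) -> int:
    """Size in bytes after zlib compression at the highest level."""
    return len(zlib.compress(data, 9))

def max_fraction_compressible(n_bits: int, saved_bits: int) -> Fraction:
    """Upper bound on the share of n-bit strings that any lossless
    scheme can shorten by at least `saved_bits` bits."""
    shorter_descriptions = 2 ** (n_bits - saved_bits + 1) - 1
    return Fraction(shorter_descriptions, 2 ** n_bits)

size = 100_000
repetitive = b"storage cell\n" * (size // 13)   # "make 16000 of these"
random_data = os.urandom(size)                  # typical "arbitrary" data

print(f"repetitive: {len(repetitive)} -> {compressed_size(repetitive)} bytes")
print(f"random:     {len(random_data)} -> {compressed_size(random_data)} bytes")
print(f"share of 64-bit strings that can lose a byte: "
      f"under {float(max_fraction_compressible(64, 8)):.4f}")
```

The repetitive input shrinks to a tiny fraction of its size, while the random input doesn't shrink at all in practice. And the bound says that fewer than 1 in 128 sequences can be shortened by even a single byte, whatever compressor you pick.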

So as a measure of what you can actually store on some hardware (including your own brain), Kolmogorov complexity is not a useful, informative description. It doesn't make sense then to inflate the memory capacity of the brain by pointing out that it may compress some memories very efficiently.

So how large is the capacity of our long-term memory? Sorry. I have no idea. That's the only honest answer out there for now. However, rest assured, you will likely never experience a "disk full" error in your brain; or you'd be the first in history.

PS:

The Wikipedia page on Kolmogorov complexity has a nice proof of why it's not possible to determine, in general, the shortest program that will output some given sequence.

But surely there must be a way, right? What if you just enumerated all programs until you bumped into the first that generated the sequence, or the first that's longer than the sequence?

Like attempts at the perpetuum mobile, these are delusions, but it's nice to see why they fail, and the talk page has an exchange dealing with exactly that. Basically, to check a program you need to run it, and not all programs terminate in finite time. Running all programs concurrently doesn't guarantee that the shortest one that can output a sequence will be the first to actually produce it.
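Here's a toy version of the "run everything concurrently" idea. It's a sketch under heavy assumptions: the "programs" are Python generators that I wrote by hand, their "lengths" are just labels in their names, and each `yield` counts as one step of computation. Nothing here enumerates real programs.

```python
def loops_forever():
    """A program that never halts."""
    while True:
        yield

def prints_after(output, steps):
    """A program that computes for `steps` steps, then outputs `output`."""
    for _ in range(steps):
        yield
    return output

def dovetail(programs, target, max_rounds):
    """Run all programs round-robin, one step each per round.

    Returns the name of the first program to halt with `target` as its
    output (or None), plus the names of the programs still running.
    """
    running = dict(programs)
    for _ in range(max_rounds):
        for name in list(running):
            try:
                next(running[name])
            except StopIteration as halt:
                del running[name]
                if halt.value == target:
                    return name, sorted(running)
    return None, sorted(running)

# Hypothetical programs, named by their pretend length in bits.
programs = {
    "len3_loop": loops_forever(),
    "len5_slow": prints_after("abc", steps=1_000),
    "len9_fast": prints_after("abc", steps=2),
}

winner, still_running = dovetail(programs, target="abc", max_rounds=100)
print(winner, still_running)
```

The 9-bit program wins the race, but the shorter 5-bit program would have produced the same output if we had waited. And from the outside there's no telling whether the 3-bit one is merely slow or will never stop. Declaring the winner "shortest" at any point is a guess.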
